1st International Workshop on Patterns and Practices of Reliable AI Engineering and Governance (AI-Pattern'24)

October 28th, 2024, in Tsukuba, Japan, co-located with the 35th IEEE International Symposium on Software Reliability Engineering (ISSRE 2024)

The tentative program can be viewed below.

AI-Pattern'24 has successfully concluded!

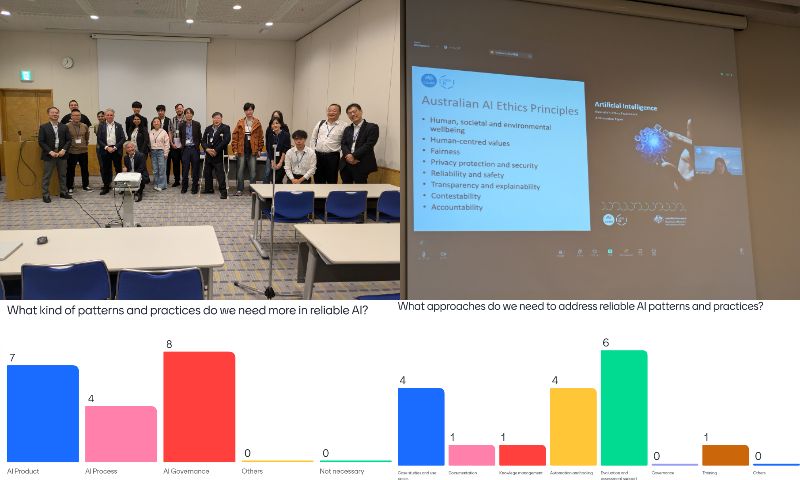

Thanks to our invited speaker, Dr. Qinghua Lu, the paper authors, all organizers, supporters, and attendees, AI-Pattern'24 concluded successfully! The workshop attracted around 25-30 attendees at its peak. The opening slides, including the voting results from the discussion, are available here. Thank you all, and see you next time!

Outline and Goal

The popularity of artificial intelligence (AI), including machine learning (ML) techniques, has increased in recent years. AI is used in many domains, including cybersecurity, the Internet of Things, and autonomous cars, and is expanding its impact on scientific research, consumer assistants, and enterprise services through advances in Generative AI (GenAI). Many works have investigated the mathematics and algorithms on which AI techniques and models are built, but few have examined the engineering of AI systems or their governance, which ensures that AI systems are built, used, and managed to maximize benefits and prevent harm. AI engineering and governance need to bring together diverse stakeholders across AI algorithms, data science, software/system engineering, compliance, legal, and business teams.

In AI software engineering and governance, there is often a gap between high-level abstract principles and low-level concrete tools and rules. Patterns, which encapsulate recurring problems and their corresponding solutions in particular contexts, and pattern languages, which organize patterns into coherent collections, can fill such gaps by providing a common "language" for the various stakeholders involved in the often interdisciplinary development and governance of AI software systems. Researchers and practitioners study best practices for engineering and governing reliable AI/ML systems, addressing issues in AI and ML techniques as well as the processes, policies, and tools for trustworthy, responsible, and safe AI system development and management. Such practices are often formalized as patterns and pattern languages. Major examples include:

- AI architecture and design patterns, such as software engineering patterns for ML applications [Washizaki22], ML design patterns [Lackshmanan20] and agent design patterns [Liu24]

- AI assurance argument patterns, such as safety case patterns for ML systems [Wozniak20] and security argument patterns for deep neural networks (DNNs) [Zeroual23][Mutsche24]

- Responsible AI engineering and governance patterns, such as patterns for creating trustworthy and safe AI systems [Lu23]

- AI development and management practices, such as lifecycle phase practices [Rahman23]

- Prompt engineering patterns, such as prompt pattern catalogs and taxonomies [White23][Sasaki24]

While reliable AI engineering and governance patterns have been documented, there is still much to uncover in this landscape. This limited understanding hampers their adoption and prevents them from realizing their full potential. This workshop seeks to improve understanding of the theoretical, social, technological, and practical advances and issues related to patterns and practices in reliable AI engineering and governance. It will bring together researchers and practitioners to discuss the future prospects of this area. The workshop will feature presentations of accepted papers exposing the latest research and practices in the area, as well as invited talks, discussions and panels, and collaborative activities.

This workshop is supported by the framework team of the JST MIRAI engineerable AI (eAI) project.

Program (subject to change)

Each accepted paper presentation will take 20 minutes, followed by a 10-minute discussion.

9:00-10:30 Session I

Opening

Invited talk: A Pattern-Oriented Approach for Engineering Safe and Responsible AI Systems

Qinghua Lu (Data61, CSIRO)

Abstract: The rapid evolution and widespread adoption of AI, particularly generative AI, have led to significant advancements in productivity and efficiency across various domains. However, the fast-growing capabilities and autonomy of AI also bring growing concerns about AI safety and responsible AI. Recent global initiatives have introduced standards and regulations on AI safety and responsible AI to guide the development and use of AI systems. Despite these efforts, these standards and regulations often remain abstract, making them difficult for practitioners to implement in real-world scenarios. On the other hand, significant effort has been put into model-level solutions, which fail to capture system-level challenges, as AI models need to be integrated into software systems that are deployed and have real-world impact. To close the gap in operationalising responsible and safe AI, this talk presents a pattern-oriented approach that provides concrete guidance for engineering safe and responsible AI systems.

Biography: Dr Qinghua Lu is a principal research scientist and leads the Responsible AI science team at CSIRO’s Data61. She is the winner of the 2023 APAC Women in AI Trailblazer Award and is part of the OECD.AI’s trustworthy AI metrics project team. She received her PhD from the University of New South Wales in 2013. Her current research interests include responsible AI, software engineering for AI, and software architecture. She has published 150+ papers in premier international journals and conferences. Her recent paper titled “Towards a Roadmap on Software Engineering for Responsible AI” received the ACM Distinguished Paper Award. Her new book, “Responsible AI: Best Practices for Creating Trustworthy AI Systems”, was published by Pearson Addison-Wesley in December 2023.

Toward Pattern-Oriented Machine Learning Reliability Argumentation

Takumi Ayukawa, Jati H. Husen, Nobukazu Yoshioka, Hironori Washizaki and Naoyasu Ubayashi (Waseda University)

11:00-12:30 Session II

A Process Pattern for Cybersecurity Assessment Automation: Experience and Futures

James Cusick (Ritsumeikan University)

Toward Extracting Learning Pattern: A Comparative Study of GPT-4o-mini and BERT Models in Predicting CVSS Base Vectors

Sho Isogai, Shinpei Ogata (Shinshu University), Yutaro Kashiwa (Nara Institute of Science and Technology), Satoshi Yazawa (Voice Research, Inc.), Kozo Okano (Shinshu University), Takao Okubo (Institute of Information Security), Hironori Washizaki (Waseda University)

Discussion

Closing Remarks

Call for Papers

We solicit contributions on the patterns, practices, and related topics in the area of reliable AI engineering and governance. Topics of interest include but are not limited to:

- Patterns and pattern languages of reliable AI engineering and governance, such as reliable AI architecture and design patterns, AI assurance argument patterns, responsible AI engineering and governance patterns, and reliable prompt engineering patterns

- Practices and experiences, such as reliable AI development and management practices and experience reports, industrial case studies and experiments

- Engineering techniques and tools for reliable AI patterns and practices, such as techniques and tools for pattern extraction, detection, application, verification, and organization

- Organizational and educational practices and experiences for AI engineering and governance to build, use, and manage reliable AI systems while maximizing benefits and preventing harms

Important Dates

- Paper submission due: August 11, 2024 (extended)

- Paper notification: September 1, 2024 (updated)

- Camera-ready submission: September 8, 2024 (updated)

- Workshop date: October 28, 2024

Paper Categories

- Full/research papers: 8 pages including references

- Short papers: 4 pages including references

- Position/new-idea papers: 2 pages including references

Paper Formatting and Submission

All submissions must adhere to the IEEE Computer Society Format Guidelines as implemented by the following LaTeX/Word templates:

- LaTeX Package (ZIP)

- Word Template (DOCX)

Papers must be submitted electronically through EasyChair, selecting International Workshop on Patterns and Practices of Reliable AI Engineering and Governance. Every submission will be peer-reviewed. Emphasis will be placed on originality, usefulness, practicality, and/or new problems to be tackled. Papers must be of high overall quality and must not have been previously published or be currently under submission elsewhere. Accepted papers will be published in a supplemental volume of the ISSRE conference proceedings by the IEEE Computer Society and will appear on IEEE Xplore. At least one author of each accepted paper must register for the ISSRE conference and present the paper in person at the workshop.

Organizing Committee

- Hironori Washizaki (Waseda University)

- Nobukazu Yoshioka (QAML Inc)

- Naoyasu Ubayashi (Waseda University)

- Emiliano Tramontana (Università di Catania)

Contact us at: aipattern2024 [at] easychair.org

Program Committee

- Shaukat Ali (Simula Research Laboratory)

- Qinghua Lu (Data61, CSIRO)

- Foutse Khomh (Polytechnique Montreal)

- Hironori Takeuchi (Musashi University)

- Ademar Aguiar (Universidade do Porto)

- Eduardo Guerra (Free University of Bozen-Bolzano)

- Joseph Yoder (The Refactory)

- Raja Rao Budaraju (Oracle)

- Yu-Chin Cheng (National Taipei University of Technology)

- Kyle Brown (IBM)

- Shinpei Hayashi (Tokyo Institute of Technology)

- Takao Okubo (Institute of Information Security)

- Shinpei Ogata (Shinshu University)

- Yann-Gaël Guéhéneuc (Concordia University)

References

- [Washizaki22] H. Washizaki, et al., “Software Engineering Design Patterns for Machine Learning Applications,” IEEE Computer, 55(3), 2022

- [Lackshmanan20] V. Lakshmanan, et al., “Machine Learning Design Patterns,” O’Reilly, 2020

- [Wozniak20] E. Wozniak, et al., “A Safety Case Pattern for Systems with Machine Learning Components,” SAFECOMP 2020 Workshop

- [Liu24] Y. Liu, et al., “Agent Design Pattern Catalogue: A Collection of Architectural Patterns for Foundation Model based Agents,” AIWare 2024

- [Zeroual23] M. Zeroual, et al., “Security Argument patterns for Deep Neural Network Development,” PLoP 2023

- [Mutsche24] M. Mutsche, et al., “Robustness-based Security Case Verification for Deep Neural Networks,” AsianPLoP 2024

- [Lu23] Q. Lu, L. Zhu, J. Whittle, and X. Xu, “Responsible AI: Best Practices for Creating Trustworthy AI Systems,” Pearson Education, 2023

- [Rahman23] M. S. Rahman, et al., “Machine Learning Application Development: Practitioners’ Insights,” Software Quality Journal, 31, 2023

- [White23] J. White, et al., “A Prompt Pattern Catalog to Enhance Prompt Engineering with ChatGPT,” arXiv 2302.11382, 2023

- [Sasaki24] Y. Sasaki, et al., “A Taxonomy and Review of Prompt Engineering Patterns in Software Engineering,” COMPSAC 2024